# Randomly sample 500 values from a normal distribution

myvar <- rnorm(500, mean = 0, sd = 1)Bayesian Statistics

1. Distributions

D.Makowski@sussex.ac.uk

Distributions

A fundamental concept in Bayesian statistics

On Normal Distributions

Why do statisticians hate normal distributions? Because they are ‘mean’!

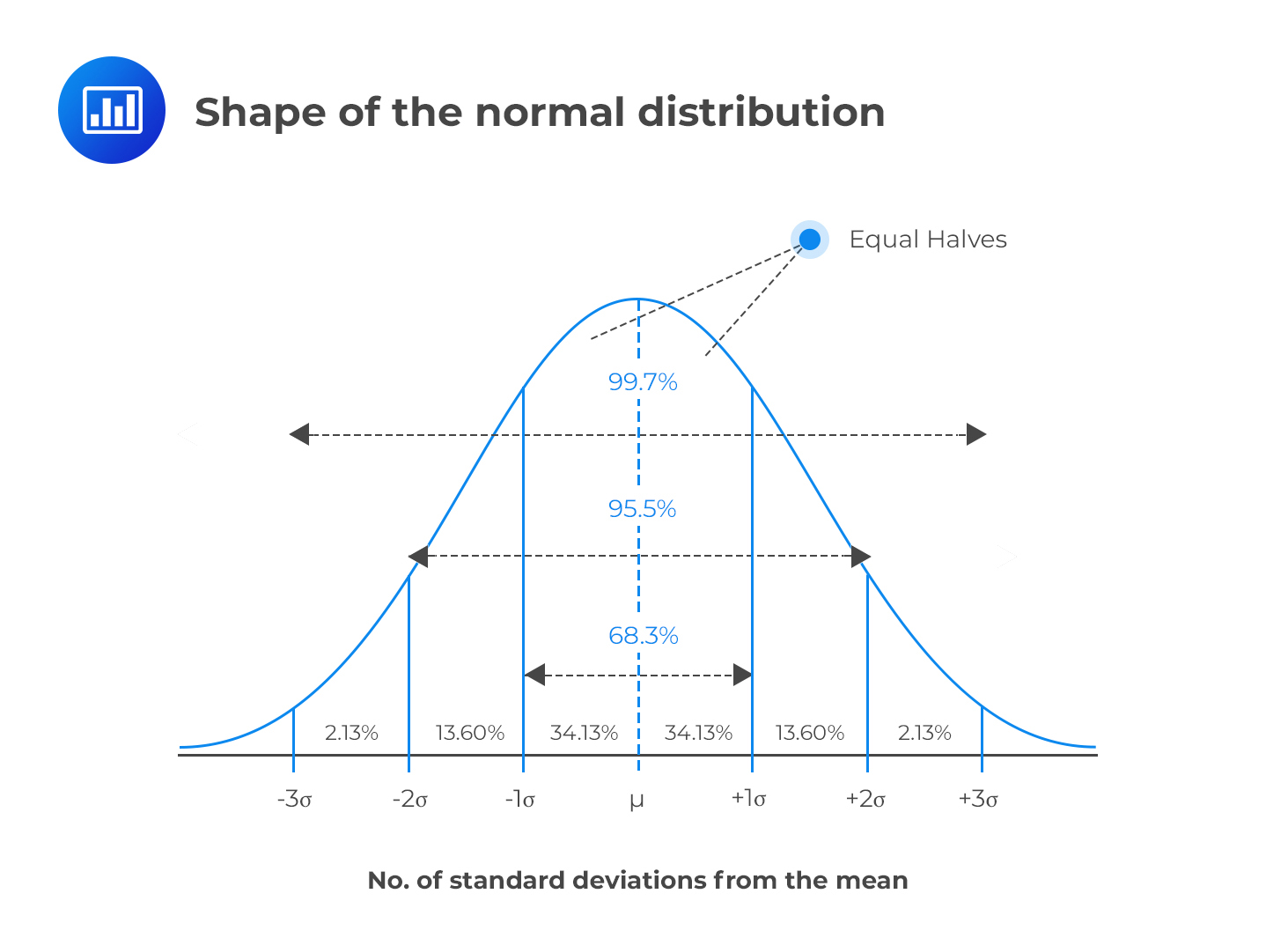

The “Bell” Curve

- The Normal Distribution is one of the most important distribution in statistics (spoiler alert: for reasons that we will see)

- What variables are normally distributed?

- It is defined by two parameters:

- Location (\(\mu\) “mu”)

- Deviation/spread (\(\sigma\) “sigma”)

- Properties:

- Symmetric

- Location = mean = median = mode

- Deviation = standard deviation (SD)

- ~68% of the data is within 1 SD of the mean, ~95% within 2 SD, ~99.7% within 3 SD

Note

A Normal distribution with \(\mu = 0\) and \(\sigma = 1\) is also called a z-Distribution (aka a Standard Normal Distribution)

What is a distribution?

- A distribution describes the probability of different outcomes

- E.g., the distribution of IQ represents the probability of encountering all the different IQ scores

- The probability is in this equivalent to the frequency (“in a group of 100 random people, how many have an IQ of > 130”)

- Distributions are defined by a set of parameters (e.g., location, deviation)

- It is an abstract and convenient way of describing the probability of various events using a limited number of parameters

- The area under the curve is equal to 1 (= describes all possible outcomes)

- The unit of the y-axis of a “density plot” is thus irrelevant (as it depends on the x-axis)

- Statistical software implement a variety of distributions, which can be used to e.g., randomly sample from them

- In R, the

r*()functions are used to draw random samples from distributions - E.g.,

rnorm()=random + normal

- In R, the

Density Estimation

- In practice, we rarely know the true distribution of our data

- Density estimation is the process of estimating the Probability Distribution of a variable

- This estimation is based on various assumptions that can be tweaked via arguments (e.g., method, kernel type, bandwidth etc.)

- The resulting density is just an estimation, and sometimes can be off

x <- rnorm(100000, mean = 0, sd = 1)

x_strictly_positive <- x[x >= 0]

ggplot(data.frame(x = x), aes(x = x, y = after_stat(count))) +

geom_vline(xintercept = 0, linetype = "dashed") +

geom_density(linewidth = 2) +

geom_density(data = data.frame(x = x_strictly_positive),

color = "red", linewidth = 1) +

theme_minimal()

Density Computation

- The

estimate_density()function returns a data frame with the estimated density - Contains two columns,

x(possible values of the variable) andy(its associated probability)

How to visualize a distribution? (1)

How to visualize a distribution? (2)

- Empirical distributions (i.e., the distribution of the data at hand) is often represented using histograms

- Histograms also depends on some parameters, such as the number of bins or the bin width

- Like density estimates, it can be inaccurate and give a distorted view of the data

Probability Density Function (PDF)

- As we have seen, we can estimate the probability density of a random variable (e.g., a sample of data) and visualize it using a density plot or a histogram

- Most common distributions have an analytical solution (i.e., a formula) to compute the probability density over a range of values.

- It is called the Probability Density Function (PDF) and can be obtained using the

d*()functions- E.g.,

dnorm()=density + normal - It requires a vector of values (

x), and the parameters of the distribution (e.g.,mean,sd)

- E.g.,

Visualizing

- These two vectors represent the x-axis and the y coordinates of the density line

- In order to plot it, we need to create a “temporary” data frame holding these two vectors as columns

Exercice!

- Increase the number of more points for a smoother density plot

- Make the line orange

Understand the parameters

- Gain an intuitive sense of the parameters’ impact

- Location changes the center of the distribution

- Scale changes the width of the distribution

Show code

df_norm <- data.frame()

for (u in c(0, 2.5, 5)) {

for (sd in c(1, 2, 3)) {

dat <- data.frame(x = seq(-10, 10, length.out = 200))

dat$p <- dnorm(dat$x, mean = u, sd = sd)

dat$distribution <- "Normal"

dat$parameters <- paste0("mean = ", u, ", SD = ", sd)

df_norm <- rbind(dat, df_norm)

}

}

p <- df_norm |>

ggplot(aes(x = x, y = p, group = parameters)) +

geom_vline(xintercept = 0, linetype = "dashed") +

geom_line(aes(color = distribution), linewidth = 2, color = "#2196F3") +

theme_minimal() +

theme(

legend.position = "none",

axis.title.y = element_blank(),

plot.title = element_text(size = 15, face = "bold", hjust = 0.5),

plot.tag = element_text(size = 20, face = "bold", hjust = 0.5),

strip.text = element_text(size = 15, face = "bold")

)

p + facet_wrap(~parameters)

Quizz

- What are the parameters that govern a normal distribution?

- What is the difference between

rnorm()anddnorm()? - Density estimation and histograms always gives an accurate representation of the data

- You cannot change the SD without changing the mean of a normal distribution

- A variable is drawn from \(Normal(\mu=3, \sigma=1)\):

- What is the most likely value?

- What is the probability that the value is lower than 3?

- The bulk of the values (~99.9%) will fall between which two values?

- My friend does a test of attractiveness and gets a score of 10.

- He says “I am very attractive!” Is it true?

- What is the most plausible score you could give to a stranger that you never saw?

On Binomial Distributions

Why did the binomial distribution break up with its partner? Because it found their relationship too binary

Binomial Distribution

- The Binomial distribution models the probability of a series of binary outcome trials (e.g., successes/failures, heads/tails, etc.)1

- It is defined by two parameters:

size(number of trials) andprob(probability of “success”)2

Random draws

- Let’s draw 10 times 1 trial with a probability of 0.5

- The “probability” argument changes the ratio of 0s and 1s

Show plot code

library(gganimate)

df_binom <- data.frame()

for (p in c(1 / 3, 1 / 2, 2 / 3)) {

dat <- data.frame()

for (i in 1:1000) {

rez <- rbinom(1, size = 1, prob = p)

dat2 <- data.frame(Zero = 0, One = 0, i = i)

dat2$Zero[rez == 0] <- 1

dat2$One[rez == 1] <- 1

dat2$distribution <- "Binomial"

dat2$parameters <- paste0("p = ", insight::format_value(p))

dat <- rbind(dat, dat2)

}

dat$Zeros <- cumsum(dat$Zero)

dat$Ones <- cumsum(dat$One)

df_binom <- rbind(df_binom, dat)

}

df_binom <- pivot_longer(

df_binom,

c("Zeros", "Ones"),

names_to = "Outcome",

values_to = "Count"

) |>

mutate(Outcome = factor(Outcome, levels = c("Zeros", "Ones")))

anim <- df_binom |>

ggplot(aes(x = Outcome, y = Count, group = parameters)) +

geom_bar(aes(fill = parameters), stat = "identity", position = "dodge") +

theme_minimal() +

labs(title = 'N events: {frame_time}', tag = "Binomial Distribution") +

transition_time(i)

anim_save(

"img/binomial.gif",

animate(anim, height = 500, width = 1000, fps = 10)

)

Binomial = Sampling from a list of 0s and 1s

- Another way of sampling from a binomial distribution is simply to randomly sample from a list of 0s and 1s

Random Walk

- Mr. Brown1 is walking through a crowd. Every second, he decides to move to the left or to the right to avoid bumping into random people

- This type of trajectory can be modeled as a random walk. The probability of moving to the left or to the right at each step is 0.5 (50% left and 50% right)

- We start at the location “0”, then it can go to “1” (+1), “2” (+1), “1” (-1), etc.

- In R, a random walk can be simulated by randomly sampling from from 1s and -1s and then cumulatively summing them2

Visualize a random walk (1)

- Creating function is very useful to avoid repeating code, and it is also good to think about your code in terms of encapsulated bits.

- It is also useful for reproducibility and generalization (you can often re-use useful functions in other projects)

Visualize a random walk (2)

Exercice!

- We want to simulate 20 different random walks and visualize them as different colors

- What functions will we need to use?

Tip

- “Do X 20 times” = loop over a sequence of iterations with

for(i in 1:20) {...} - Because ggplot takes a dataframe, we will need all the data from the 20 runs in one single dataframe

- The

data.frame()function can be used to initialize an empty data frame - The

rbind()function can be used to concatenate data frames vertically

Solution (1)

- Let’s simulate 20 different random walks

data <- data.frame() # Initialize empty data frame

for (i in 1:20) {

walk_data <- data.frame(

trajectory = random_walk(10),

time = seq(0, 10),

iteration = i

)

data <- rbind(data, walk_data)

}

data trajectory time iteration

1 0 0 1

2 -1 1 1

3 -2 2 1

4 -1 3 1

5 0 4 1

6 1 5 1

7 0 6 1

8 1 7 1

9 0 8 1

10 -1 9 1

11 0 10 1

12 0 0 2

13 -1 1 2

14 0 2 2

15 1 3 2

16 2 4 2

17 3 5 2

18 2 6 2

19 3 7 2

20 4 8 2

21 5 9 2

22 6 10 2

23 0 0 3

24 1 1 3

25 0 2 3

26 -1 3 3

27 0 4 3

28 -1 5 3

29 0 6 3

30 1 7 3

31 0 8 3

32 -1 9 3

33 0 10 3

34 0 0 4

35 1 1 4

36 2 2 4

37 1 3 4

38 0 4 4

39 1 5 4

40 2 6 4

41 3 7 4

42 4 8 4

43 5 9 4

44 6 10 4

45 0 0 5

46 -1 1 5

47 0 2 5

48 1 3 5

49 2 4 5

50 3 5 5

51 2 6 5

52 1 7 5

53 0 8 5

54 1 9 5

55 2 10 5

56 0 0 6

57 -1 1 6

58 -2 2 6

59 -1 3 6

60 0 4 6

61 1 5 6

62 0 6 6

63 -1 7 6

64 -2 8 6

65 -1 9 6

66 -2 10 6

67 0 0 7

68 1 1 7

69 0 2 7

70 1 3 7

71 0 4 7

72 -1 5 7

73 0 6 7

74 1 7 7

75 0 8 7

76 -1 9 7

77 -2 10 7

78 0 0 8

79 1 1 8

80 2 2 8

81 1 3 8

82 2 4 8

83 3 5 8

84 4 6 8

85 3 7 8

86 2 8 8

87 1 9 8

88 0 10 8

89 0 0 9

90 1 1 9

91 0 2 9

92 -1 3 9

93 -2 4 9

94 -3 5 9

95 -4 6 9

96 -3 7 9

97 -2 8 9

98 -1 9 9

99 0 10 9

100 0 0 10

101 -1 1 10

102 0 2 10

103 1 3 10

104 2 4 10

105 3 5 10

106 2 6 10

107 1 7 10

108 2 8 10

109 1 9 10

110 0 10 10

111 0 0 11

112 1 1 11

113 2 2 11

114 1 3 11

115 0 4 11

116 -1 5 11

117 0 6 11

118 -1 7 11

119 0 8 11

120 -1 9 11

121 0 10 11

122 0 0 12

123 1 1 12

124 2 2 12

125 1 3 12

126 2 4 12

127 1 5 12

128 0 6 12

129 1 7 12

130 0 8 12

131 1 9 12

132 2 10 12

133 0 0 13

134 1 1 13

135 0 2 13

136 -1 3 13

137 0 4 13

138 -1 5 13

139 -2 6 13

140 -3 7 13

141 -4 8 13

142 -3 9 13

143 -2 10 13

144 0 0 14

145 1 1 14

146 2 2 14

147 3 3 14

148 2 4 14

149 1 5 14

150 0 6 14

151 1 7 14

152 0 8 14

153 -1 9 14

154 0 10 14

155 0 0 15

156 -1 1 15

157 -2 2 15

158 -1 3 15

159 -2 4 15

160 -3 5 15

161 -2 6 15

162 -3 7 15

163 -4 8 15

164 -3 9 15

165 -2 10 15

166 0 0 16

167 1 1 16

168 2 2 16

169 1 3 16

170 2 4 16

171 1 5 16

172 0 6 16

173 -1 7 16

174 -2 8 16

175 -3 9 16

176 -2 10 16

177 0 0 17

178 -1 1 17

179 0 2 17

180 -1 3 17

181 -2 4 17

182 -1 5 17

183 -2 6 17

184 -1 7 17

185 0 8 17

186 1 9 17

187 0 10 17

188 0 0 18

189 1 1 18

190 0 2 18

191 -1 3 18

192 0 4 18

193 1 5 18

194 0 6 18

195 1 7 18

196 2 8 18

197 3 9 18

198 2 10 18

199 0 0 19

200 -1 1 19

201 0 2 19

202 -1 3 19

203 -2 4 19

204 -3 5 19

205 -4 6 19

206 -3 7 19

207 -4 8 19

208 -5 9 19

209 -6 10 19

210 0 0 20

211 1 1 20

212 2 2 20

213 1 3 20

214 2 4 20

215 3 5 20

216 4 6 20

217 5 7 20

218 6 8 20

219 5 9 20

220 6 10 20Solution (2)

Solution (3)

- The

coloraesthetic needs to be converted to a factor to be interpreted as a discrete variable

Make it nicer

- We can increase the steps do make it more interesting

Show plot code

data <- data.frame() # Initialize empty data frame

for (i in 1:20) {

walk_data <- data.frame(

trajectory = random_walk(500),

time = seq(0, 500),

iteration = i

)

data <- rbind(data, walk_data)

}

data |>

mutate(iteration = as.factor(iteration)) |>

ggplot(aes(x = time, y = trajectory, color = iteration)) +

geom_line(linewidth = 0.5) +

labs(y = "Position", x = "Time") +

scale_color_viridis_d(option = "inferno", guide = "none") +

see::theme_blackboard() +

coord_flip()

Quizz

- A Binomial distributions deals with discrete, binary outcomes

- Tossing a coin (heads vs. tails) enacts a Binomial distribution

- A Random Walk is the successive sum of random binary outcomes

- What is the distribution of the result (arrival position) of a Random Walk?

Exercice!

- Run the random walk simulation with 10000 steps and 200 steps

- Visualize the distribution of the final positions (at time = 200) using a histogram

Simulation

Show figure code

library(gganimate)

set.seed(1)

data <- data.frame()

all_iter <- data.frame()

distr <- data.frame()

for (i in 1:100) {

walk_data <- data.frame(

trajectory = random_walk(99),

time = seq(0, 99),

group = i,

iteration = NA

)

data <- rbind(data, walk_data)

data$iteration <- i

max_t <- max(walk_data$time)

distr_data <- filter(data, time == max_t)$trajectory |>

bayestestR::estimate_density(precision = 256) |>

mutate(

y = datawizard::rescale(y, to = c(max_t, max_t + max_t / 3)),

iteration = i

)

distr <- rbind(distr, distr_data)

all_iter <- rbind(all_iter, data)

}

df <- all_iter |>

mutate(iteration = as.factor(iteration), group = as.factor(group))

p <- df |>

# filter(iteration == 100) |>

ggplot() +

geom_line(

aes(x = time, y = trajectory, color = group),

alpha = 0.7,

linewidth = 1.3

) +

geom_ribbon(

data = distr,

aes(xmin = max(all_iter$time), xmax = y, y = x),

fill = "#FF9800"

) +

geom_point(

data = filter(df, time == max(time)),

aes(x = time, y = trajectory, color = group),

size = 5,

alpha = 0.2

) +

labs(y = "Position", x = "Time", title = "N random walks: {frame}") +

scale_color_viridis_d(option = "turbo", guide = "none") +

see::theme_blackboard() +

theme(plot.title = element_text(hjust = 0.5, size = 15, face = "bold")) +

coord_flip()

# p

anim <- p + transition_manual(iteration)

gganimate::anim_save(

"img/random.gif",

animate(

anim,

height = 1000,

width = 1000,

fps = 7,

end_pause = 7 * 4,

duration = 20

)

)

🤯🤯🤯🤯🤯🤯🤯🤯

A NORMAL DISTRIBUTION

🤯🤯🤯🤯🤯🤯🤯🤯

- How is it possible than a complex distribution emerges from such a simple process of random binary choices?

Central Limit Theorem (1)

- Formula of the Normal Distribution:

\(f(x) = \frac{1}{\sigma\sqrt{2\pi}} e^{-\frac{1}{2}\left(\frac{x-\mu}{\sigma}\right)^2}\) - Despite its (relative) complexity, the Normal distribution naturally emerges from very simple processes!

- This is known as the Central Limit Theorem, which states that the distribution of the sums/means of many random variables tends to a Normal distribution

- This is why the Normal distribution is so ubiquitous in nature and statistics!

- Because many measurements are the amalgamation result of many random mechanisms

On Uniform Distributions

Why did the uniform distribution get hired as a referee? Because it always calls it fair and square, giving every player an equal chance!

Uniform Distribution

- The Uniform distribution is the simplest distribution

- It is defined by two parameters: a lower and upper bound

- All values between the bounds are equally likely (the PDF is flat)

- Exercice: generate 50,000 random values between -10 and 10 and plot the histogram

- Tip: use

runif()

- Tip: use

Uniform Distribution - Applications

-

- E.g., to jitter the Inter-Stimulus Intervals, to randomly select between various conditions, etc.

-

- Can be used when we want to make no assumptions1 about the distribution of the data

On Beta Distributions

Why do beta distributions love to go to the gym? So that they are not out of shape!

Beta Distribution

- The Beta distribution can be used to model probabilities

- Defined by two shape parameters, α and β (

shape1&shape2)- Note it can also be expressed via other, more convenient, parametrizations. Often, in Beta-regression models, the Beta distribution is set by a location \(\mu\) (mean) and scale \(\phi\) parameters.

- Note it can also be expressed via other, more convenient, parametrizations. Often, in Beta-regression models, the Beta distribution is set by a location \(\mu\) (mean) and scale \(\phi\) parameters.

- Only expressed in the range \(]0, 1[\) (i.e., null outside of this range)

Beta Distribution - Parameters

- \(\alpha\) (

shape1) and \(\beta\) (shape2) parameters control the shape of the distribution - \(\alpha\) pushes the distribution to the right, \(\beta\) to the left

- When \(\alpha = \beta\), the distribution is symmetric

Show figure code

df_beta <- data.frame()

for (shape1 in c(1, 2, 3, 6)) {

for (shape2 in c(1, 2, 3, 6)) {

dat <- data.frame(x = seq(-0.25, 1.25, 0.0001))

dat$p <- dbeta(dat$x, shape1 = shape1, shape2 = shape2)

dat$distribution <- "Beta"

dat$parameters <- paste0("alpha = ", shape1, ", beta = ", shape2)

df_beta <- rbind(dat, df_beta)

}

}

p <- df_beta |>

ggplot(aes(x = x, y = p, group = parameters)) +

geom_vline(xintercept = c(0, 1), linetype = "dashed") +

geom_line(aes(color = distribution), linewidth = 2, color = "#4CAF50") +

theme_minimal() +

theme(

legend.position = "none",

axis.title.y = element_blank(),

plot.title = element_text(size = 15, face = "bold", hjust = 0.5),

plot.tag = element_text(size = 20, face = "bold", hjust = 0.5),

strip.text = element_text(size = 15, face = "bold")

) +

facet_wrap(~parameters, scales = "free_y")

p

Beta Distribution - Applications

-

- E.g., proportion of time a patient exhibits a certain behavior

On Gamma Distributions

Gamma distributions receive many compliments about their long tail. That’s why there are always positive!

Gamma Distribution

- Defined by a shape parameter k and a scale parameter θ (theta)

- Only expressed in the range \([0, +\infty [\) (i.e., non-negative)

- Left-skewed distribution

- Used to model continuous non-negative data (e.g., time)

Gamma Distribution - Parameters

- \(k\) (

shape) and \(\theta\) (scale) parameters control the shape of the distribution - When \(k = 1\), it is equivalent to an exponential distribution

Show figure code

df_gamma <- data.frame()

for (shape in c(0.5, 1, 2, 4)) {

for (scale in c(0.1, 0.5, 1, 2)) {

dat <- data.frame(x = seq(0.001, 25, length.out = 1000))

dat$p <- dgamma(dat$x, shape = shape, scale = scale)

dat$distribution <- "Beta"

dat$parameters <- paste0("k = ", shape, ", theta = ", scale)

df_gamma <- rbind(dat, df_gamma)

}

}

p <- df_gamma |>

ggplot(aes(x = x, y = p, group = parameters)) +

# geom_vline(xintercept=c(0, 1), linetype="dashed") +

geom_line(aes(color = distribution), linewidth = 2, color = "#9C27B0") +

theme_minimal() +

theme(

legend.position = "none",

axis.title.y = element_blank(),

plot.title = element_text(size = 15, face = "bold", hjust = 0.5),

plot.tag = element_text(size = 20, face = "bold", hjust = 0.5),

strip.text = element_text(size = 15, face = "bold")

) +

facet_wrap(~parameters, scales = "free_y")

p

Exercice!

- Make groups. Each groups picks a distribution (Normal, Uniform, Beta, Gamma) and a set of parameters

- Then:

- Draw 100 random samples from that distribution

- Compute the mean of each random subset

- Store results in a vector

- Repeat 10,000 times

- Plot the distribution of the means

Solution

- What does yours look like?

They all look like…

🤯🤯🤯🤯🤯🤯🤯🤯

A NORMAL DISTRIBUTION

🤯🤯🤯🤯🤯🤯🤯🤯

Central Limit Theorem (2)

- The Central Limit Theorem hits gain: “the distribution of the means of any sample approximates a normal distribution as the sample size gets larger, regardless of the population’s distribution”

- Practical Implications: The Central Limit Theorem is crucial for inferential statistics. It underpins many statistical methods, such as frequentist hypothesis testing and confidence intervals. It allows for the use of normal probability models to make inferences about population parameters even when the population distribution is not normal.

- Standard Error (SE) vs. Standard Deviation (SD)

- The standard deviation is a measure of the variability of a single sample of observations

- The standard error is a measure of the variability of many sample means (it is the SD of the averages of many samples drawn from the same parent distribution). The SE is often assumed to be normally distributed (even if the underlying distribution is not normal), which is backed by the CLT.

On Cauchy Distributions

Why don’t statisticians play hide and seek with Cauchy distributions? Because they never know where they’re going to show up and how far away they could be!

Cauchy Distribution

- The Cauchy distribution is known for its “heavy tails” (aka “fat tails”)

- Characterized by a location parameter (the median) and a scale parameter (the spread)

- The Cauchy distribution is one notable exception to the Central Limit Theorem (CLT): the distribution of the sample means of a Cauchy distribution remains a Cauchy distribution (instead of Normal). This is because the heavy tails of the Cauchy distribution significantly influence the sample mean, preventing it from settling into a normal distribution.

On t-Distributions

How do you call the PhD diploma of a Student’s t-distribution? A degree of freedom!

t-Distribution

- Both Cauchy and Normal are extreme cases of the Student’s t-distribution

- Student’s t-distribution becomes the Cauchy distribution when the degrees of freedom is equal to one and converges to the normal distribution as the degrees of freedom go to infinity

- Defined by its degrees of freedom \(df\) (location and scale usually fixed to 0 and 1)

- Tends to have heavier tails than the normal distribution (but less than Cauchy)

Show figure code

df <- data.frame(x = seq(-5, 5, length.out = 1000))

df$Normal <- dnorm(df$x, mean = 0, sd = 1)

df$Cauchy <- dcauchy(df$x, location = 0, scale = 1)

df$Student_1.5 <- dt(df$x, df = 1.5)

df$Student_2 <- dt(df$x, df = 2)

df$Student_3 <- dt(df$x, df = 3)

df$Student_5 <- dt(df$x, df = 5)

df$Student_10 <- dt(df$x, df = 10)

df$Student_20 <- dt(df$x, df = 20)

df |>

pivot_longer(

starts_with("Student"),

names_to = "Distribution",

values_to = "PDF"

) |>

separate(Distribution, into = c("Distribution", "df"), sep = "_") |>

mutate(label = paste0("Student (df=", df, ")")) |>

ggplot(aes(x = x)) +

geom_line(

data = df,

aes(y = Normal, color = "Normal (0, 1)"),

linewidth = 2

) +

geom_line(

data = df,

aes(y = Cauchy, color = "Cauchy (0, 1)"),

linewidth = 2

) +

geom_line(aes(y = PDF, color = label)) +

scale_color_manual(

values = c(

`Cauchy (0, 1)` = "#F44336",

`Student (df=1.5)` = "#FF5722",

`Student (df=2)` = "#FF9800",

`Student (df=3)` = "#FFC107",

`Student (df=5)` = "#FFEB3B",

`Student (df=10)` = "#CDDC39",

`Student (df=20)` = "#8BC34A",

`Normal (0, 1)` = "#2196F3"

),

breaks = c(

"Normal (0, 1)",

"Student (df=20)",

"Student (df=10)",

"Student (df=5)",

"Student (df=3)",

"Student (df=2)",

"Student (df=1.5)",

"Cauchy (0, 1)"

)

) +

see::theme_modern() +

theme(

axis.title.x = element_blank(),

axis.title.y = element_blank(),

axis.ticks.y = element_blank(),

plot.title = element_text(size = 15, face = "bold", hjust = 0.5),

strip.text = element_text(size = 15, face = "bold")

) +

labs(title = "Normal, Cauchy, and Student's t-Distribution", color = NULL)

t-Distribution - Applications

-

- E.g., comparing the means of two groups in an experiment

Marginal Distributions

- What is the distribution of

var1alone (i.e;, its marginal distribution)? - What is the marginal distribution of

var2?

Quizz

- What are the marginal distributions of

var1andvar2?

Quizz

- What are the marginal distributions of

var1andvar2?

Quizz

Quizz

The End (for now)

Thank you!