The Data

For this chapter, we will be using the data from Wagenmakers et al., (2008) - Experiment 1 also reanalyzed by Heathcote & Love (2012), that contains responses and response times for several participants in two conditions (where instructions emphasized either speed or accuracy). Using the same procedure as the authors, we excluded all trials with uninterpretable response time, i.e., responses that are too fast (<200 ms instead of <180 ms) or too slow (>2 sec instead of >3 sec).

set.seed(123) # For reproducibility

df <- read.csv("https://raw.githubusercontent.com/DominiqueMakowski/CognitiveModels/main/data/wagenmakers2008.csv")

df <- df[df$RT > 0.2 & df$Participant %in% c(1, 2, 3), ]

# Show 10 first rows

head(df, 10)

#> Participant Condition RT Error Frequency

#> 1 1 Speed 0.700 FALSE Low

#> 2 1 Speed 0.392 TRUE Very Low

#> 3 1 Speed 0.460 FALSE Very Low

#> 4 1 Speed 0.455 FALSE Very Low

#> 5 1 Speed 0.505 TRUE Low

#> 6 1 Speed 0.773 FALSE High

#> 7 1 Speed 0.390 FALSE High

#> 8 1 Speed 0.587 TRUE Low

#> 9 1 Speed 0.603 FALSE Low

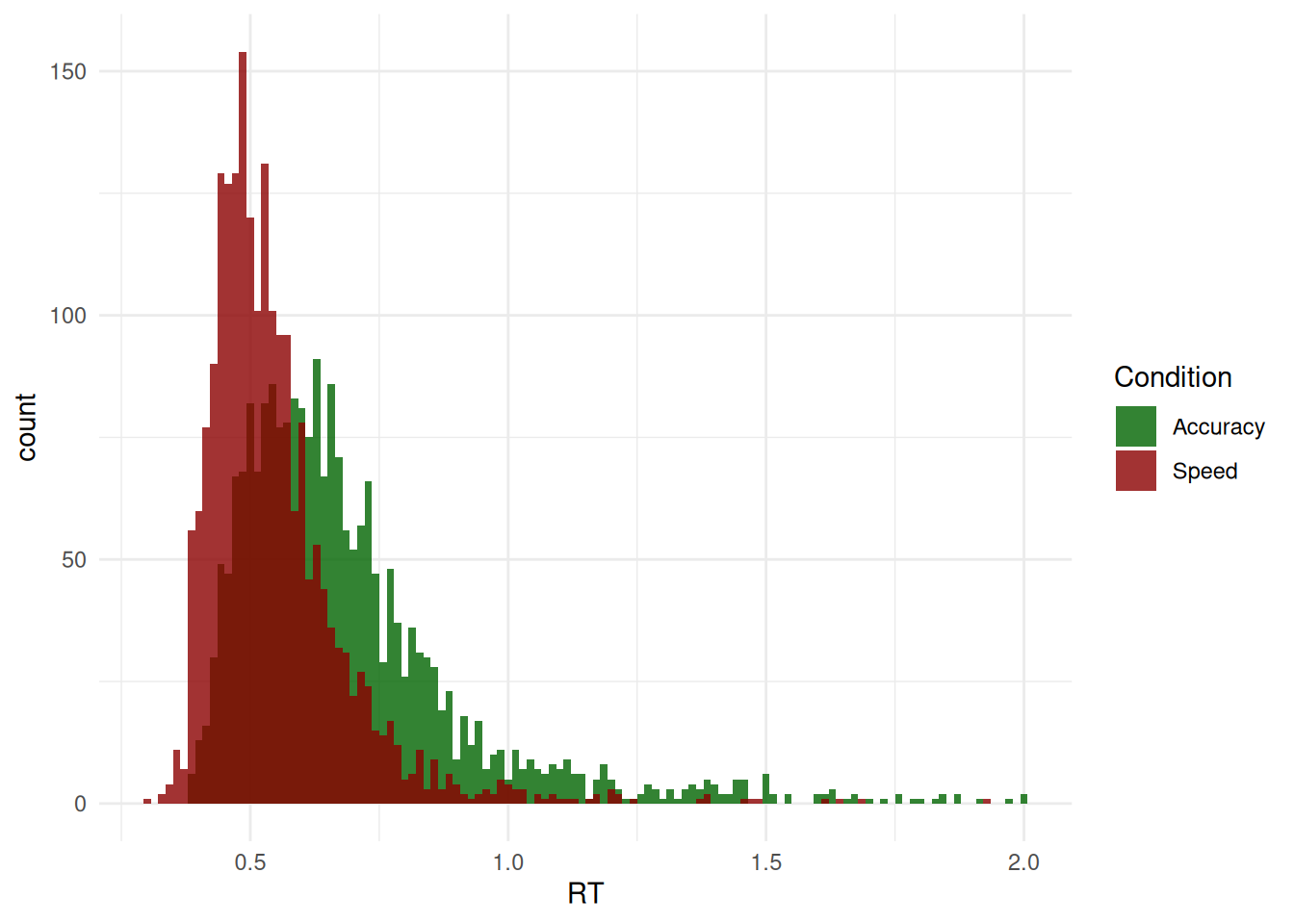

#> 10 1 Speed 0.435 FALSE HighWe are going to first take interest in the response times (RT) of Correct answers only (as we can assume that errors are underpinned by a different generative process).

df <- df[df$Error == 0, ]

ggplot(df, aes(x = RT, fill = Condition)) +

geom_histogram(bins = 120, alpha = 0.8, position = "identity") +

scale_fill_manual(values = c("darkgreen", "darkred")) +

theme_minimal()

Models

Normal

A basic linear model.

Code

f <- bf(

RT ~ Condition

)

m_normal <- brm(f,

data = df,

init = 0,

chains = 4, iter = 500, backend = "cmdstanr"

)

m_normal <- brms::add_criterion(m_normal, "loo")

saveRDS(m_normal, file = "models/m_normal.rds")ExGaussian

Code

f <- bf(

RT ~ Condition,

sigma ~ Condition,

beta ~ Condition,

family = exgaussian()

)

m_exgauss <- brm(f,

data = df,

family = exgaussian(),

init = 0,

chains = 4, iter = 500, backend = "cmdstanr"

)

m_exgauss <- brms::add_criterion(m_exgauss, "loo")

saveRDS(m_exgauss, file = "models/m_exgauss.rds")Shifted LogNormal

Code

f <- bf(

RT ~ Condition,

sigma ~ Condition,

tau ~ Condition,

minrt = min(df$RT),

family = rt_lognormal()

)

priors <- brms::set_prior("normal(0, 1)", class = "Intercept", dpar = "tau") |>

brms::validate_prior(f, data = df)

m_lognormal <- brm(

f,

prior = priors,

data = df,

stanvars = rt_lognormal_stanvars(),

init = 0,

chains = 4, iter = 500, backend = "cmdstanr"

)

m_lognormal <- brms::add_criterion(m_lognormal, "loo")

saveRDS(m_lognormal, file = "models/m_lognormal.rds")Inverse Gaussian (Shifted Wald)

Code

f <- bf(

RT ~ Condition,

bs ~ Condition,

tau ~ Condition,

minrt = min(df$RT),

family = rt_invgaussian()

)

priors <- brms::set_prior("normal(0, 1)", class = "Intercept", dpar = "tau") |>

brms::validate_prior(f, data = df)

m_wald <- brm(

f,

prior = priors,

data = df,

stanvars = rt_invgaussian_stanvars(),

init = 0,

chains = 4, iter = 500, backend = "cmdstanr"

)

m_wald <- brms::add_criterion(m_wald, "loo")

saveRDS(m_wald, file = "models/m_wald.rds")Weibull

Code

f <- bf(

RT ~ Condition,

sigma ~ Condition,

tau ~ Condition,

minrt = min(df$RT),

family = rt_weibull()

)

priors <- brms::set_prior("normal(0, 1)", class = "Intercept", dpar = "tau") |>

brms::validate_prior(f, data = df)

m_weibull <- brm(

f,

prior = priors,

data = df,

stanvars = rt_weibull_stanvars(),

init = 0,

chains = 4, iter = 500, backend = "cmdstanr"

)

m_weibull <- brms::add_criterion(m_weibull, "loo")

saveRDS(m_weibull, file = "models/m_weibull.rds")LogWeibull (Shifted Gumbel)

Code

f <- bf(

RT ~ Condition,

sigma ~ Condition,

tau ~ Condition,

minrt = min(df$RT),

family = rt_logweibull()

)

priors <- brms::set_prior("normal(0, 1)", class = "Intercept", dpar = "tau") |>

brms::validate_prior(f, data = df)

m_logweibull <- brm(

f,

prior = priors,

data = df,

stanvars = rt_logweibull_stanvars(),

init = 0,

chains = 4, iter = 500, backend = "cmdstanr"

)

m_logweibull <- brms::add_criterion(m_logweibull, "loo")

saveRDS(m_logweibull, file = "models/m_logweibull.rds")Inverse Weibull (Shifted Fréchet)

Code

f <- bf(

RT ~ Condition,

sigma ~ Condition,

tau ~ Condition,

minrt = min(df$RT),

family = rt_invweibull()

)

priors <- brms::set_prior("normal(0, 1)", class = "Intercept", dpar = "tau") |>

brms::validate_prior(f, data = df)

m_invweibull <- brm(

f,

prior = priors,

data = df,

stanvars = rt_invweibull_stanvars(),

init = 0,

chains = 4, iter = 500, backend = "cmdstanr"

)

m_invweibull <- brms::add_criterion(m_invweibull, "loo")

saveRDS(m_invweibull, file = "models/m_invweibull.rds")Gamma

Code

f <- bf(

RT ~ Condition,

sigma ~ Condition,

tau ~ Condition,

minrt = min(df$RT),

family = rt_gamma()

)

priors <- brms::set_prior("normal(0, 1)", class = "Intercept", dpar = "tau") |>

brms::validate_prior(f, data = df)

m_gamma <- brm(

f,

prior = priors,

data = df,

stanvars = rt_gamma_stanvars(),

init = 0,

chains = 4, iter = 500, backend = "cmdstanr"

)

m_gamma <- brms::add_criterion(m_gamma, "loo")

saveRDS(m_gamma, file = "models/m_gamma.rds")Inverse Gamma

Code

f <- bf(

RT ~ Condition,

sigma ~ Condition,

tau ~ Condition,

minrt = min(df$RT),

family = rt_invgamma()

)

priors <- brms::set_prior("normal(0, 1)", class = "Intercept", dpar = "tau") |>

brms::validate_prior(f, data = df)

m_invgamma <- brm(

f,

prior = priors,

data = df,

stanvars = rt_invgamma_stanvars(),

init = 0,

chains = 4, iter = 500, backend = "cmdstanr"

)

m_invgamma <- brms::add_criterion(m_invgamma, "loo")

saveRDS(m_invgamma, file = "models/m_invgamma.rds")Linear Ballistic Accumulator

Code

f <- bf(

RT ~ Condition,

sigma = 1,

sigmabias ~ Condition,

bs ~ Condition,

tau ~ Condition,

minrt = min(df$RT),

family = rt_lba()

)

priors <- c(

brms::set_prior("normal(0, 1)", class = "Intercept", dpar = "mu"),

brms::set_prior("normal(0, 1)", class = "Intercept", dpar = "sigmabias"),

brms::set_prior("normal(0, 1)", class = "Intercept", dpar = "bs"),

brms::set_prior("normal(0, 1)", class = "Intercept", dpar = "tau")

)|>

brms::validate_prior(f, data = df)

m_lba <- brm(

f,

prior = priors,

data = df,

stanvars = rt_lba_stanvars(),

init = 0.5,

chains = 4, iter = 500, backend = "cmdstanr"

)

m_lba <- brms::add_criterion(m_lba, "loo")

saveRDS(m_lba, file = "models/m_lba.rds")Model Comparison

Model Fit

We can compare these models together using the loo package, which shows that CHOCO provides a significantly better fit than the other models.

loo::loo_compare(m_normal, m_exgauss, m_lognormal, m_wald,

m_weibull, m_logweibull, m_invweibull,

m_gamma, m_invgamma, m_lba) |>

parameters(include_ENP = TRUE)

#> # Fixed Effects

#>

#> Name | LOOIC | ENP | ELPD | Difference | Difference_SE | p

#> -------------------------------------------------------------------------------

#> m_lba | -4845.13 | 7.29 | 2422.56 | 0.00 | 0.00 |

#> m_invweibull | -4840.82 | 4.72 | 2420.41 | -2.15 | 5.95 | 0.717

#> m_logweibull | -4840.37 | 5.03 | 2420.18 | -2.38 | 6.00 | 0.691

#> m_invgamma | -4831.71 | 8.31 | 2415.85 | -6.71 | 6.22 | 0.281

#> m_exgauss | -4792.89 | 6.62 | 2396.45 | -26.12 | 9.53 | 0.006

#> m_lognormal | -4763.00 | 8.80 | 2381.50 | -41.06 | 11.08 | < .001

#> m_wald | -4728.73 | 10.84 | 2364.36 | -58.20 | 15.07 | < .001

#> m_gamma | -4393.23 | 7.53 | 2196.61 | -225.95 | 24.80 | < .001

#> m_weibull | -3680.39 | 9.33 | 1840.19 | -582.37 | 38.96 | < .001

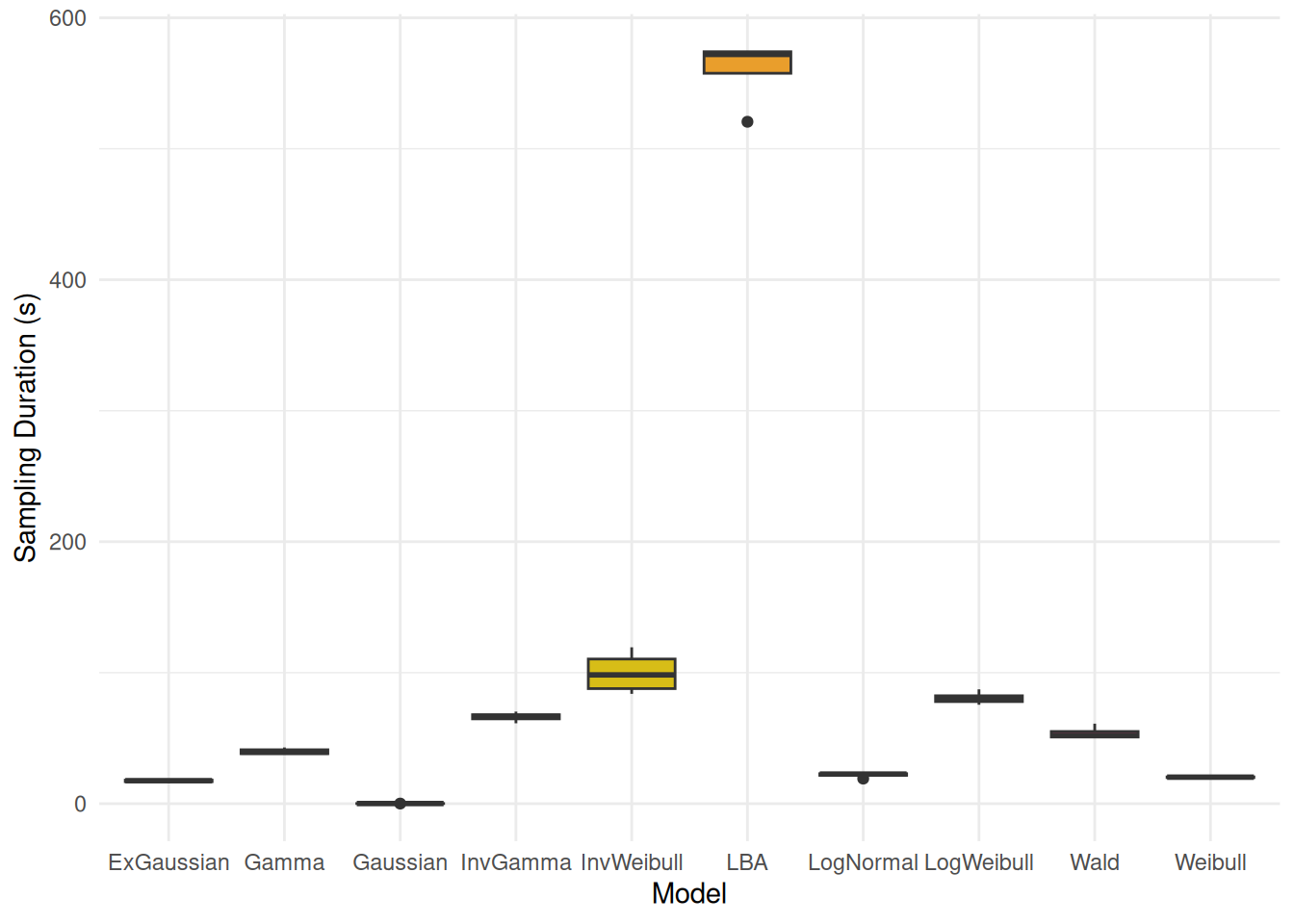

#> m_normal | -1904.18 | 7.82 | 952.09 | -1470.47 | 73.27 | < .001Sampling Duration

rbind(

data_modify(attributes(m_normal$fit)$metadata$time$chain, Model="Gaussian"),

data_modify(attributes(m_exgauss$fit)$metadata$time$chain, Model="ExGaussian"),

data_modify(attributes(m_lognormal$fit)$metadata$time$chain, Model="LogNormal"),

data_modify(attributes(m_wald$fit)$metadata$time$chain, Model="Wald"),

data_modify(attributes(m_weibull$fit)$metadata$time$chain, Model="Weibull"),

data_modify(attributes(m_logweibull$fit)$metadata$time$chain, Model="LogWeibull"),

data_modify(attributes(m_invweibull$fit)$metadata$time$chain, Model="InvWeibull"),

data_modify(attributes(m_gamma$fit)$metadata$time$chain, Model="Gamma"),

data_modify(attributes(m_invgamma$fit)$metadata$time$chain, Model="InvGamma"),

data_modify(attributes(m_lba$fit)$metadata$time$chain, Model="LBA")

) |>

ggplot(aes(x = Model, y = total, fill = Model)) +

geom_boxplot() +

labs(y = "Sampling Duration (s)") +

scale_fill_material_d(guide = "none") +

# scale_y_log10() +

theme_minimal()

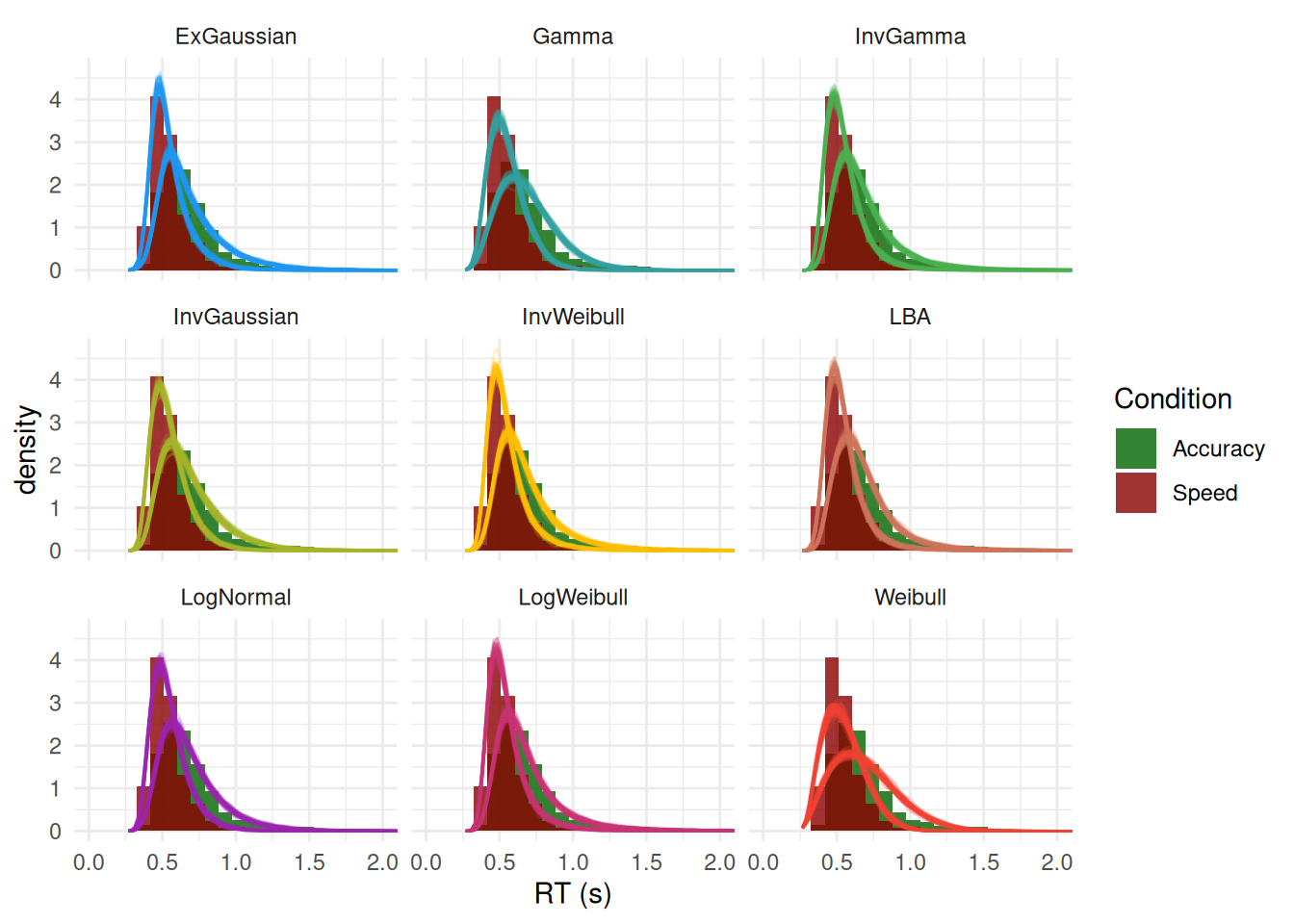

Posterior Predictive Check

iterations controls the actual number of iterations used (e.g., for the point-estimate) and keep_iterations the number included.

Code

pred <- rbind(

# estimate_prediction(m_normal, keep_iterations = 50, iterations = 50) |>

# reshape_iterations() |>

# data_modify(Model = "Normal"),

estimate_prediction(m_exgauss, keep_iterations = 50, iterations = 50) |>

reshape_iterations() |>

data_modify(Model = "ExGaussian"),

estimate_prediction(m_lognormal, keep_iterations = 50, iterations = 50) |>

reshape_iterations() |>

data_modify(Model = "LogNormal"),

estimate_prediction(m_wald, keep_iterations = 50, iterations = 50) |>

reshape_iterations() |>

data_modify(Model = "InvGaussian"),

estimate_prediction(m_weibull, keep_iterations = 50, iterations = 50) |>

reshape_iterations() |>

data_modify(Model = "Weibull"),

estimate_prediction(m_logweibull, keep_iterations = 50, iterations = 50) |>

reshape_iterations() |>

data_modify(Model = "LogWeibull"),

estimate_prediction(m_invweibull, keep_iterations = 50, iterations = 50) |>

reshape_iterations() |>

data_modify(Model = "InvWeibull"),

estimate_prediction(m_gamma, keep_iterations = 50, iterations = 50) |>

reshape_iterations() |>

data_modify(Model = "Gamma"),

estimate_prediction(m_invgamma, keep_iterations = 50, iterations = 50) |>

reshape_iterations() |>

data_modify(Model = "InvGamma"),

estimate_prediction(m_lba, keep_iterations = 50, iterations = 50) |>

reshape_iterations() |>

data_modify(Model = "LBA") |>

data_filter(iter_value < 2)

)

#> Loading required namespace: rstan

p <- pred |>

ggplot(aes(x=iter_value)) +

geom_histogram(data = df, aes(x=RT, y = after_stat(density), fill = Condition),

position = "identity", bins=120, alpha = 0.8) +

geom_line(aes(color=Model, group=interaction(Condition, iter_group)), stat="density", alpha=0.2) +

theme_minimal() +

facet_wrap(~Model) +

coord_cartesian(xlim = c(0, 2)) +

scale_fill_manual(values = c("darkgreen", "darkred")) +

scale_color_material_d(guide = "none") +

labs(x = "RT (s)")

p